Formal evaluation methods: their utility and limitations [1]

C. le Pair

STW - Technology Foundation (Utrecht, The Netherlands)

Science and evaluation

Most scientists tend to become uneasy when the subject of 'evaluation' comes up. In a way, this

is surprising, because science itself is a continuous process of evaluation. A scientist builds

on knowledge acquired earlier. He checks and double-checks the thoughts, data and theories provided

by his peers, both contemporary and from the past. And if he is any good as a scientist, he does

the same with his own findings and results. The history of science is full of bitter disputes

between famous men and women who falsified, or thought they could falsify, the results of their

colleagues' work, their thoughts and data.

However, in the last few decades a new element has emerged: the layman or bureaucrat who meddles

with matters that used to be solely the concern of scholars and intellectuals. Let me say something

about my personal experience here. After a career of some eight years in physics, I became a member

of the directorate of FOM, the physics branch of the Netherlands Research Council system. In short,

I became a bureaucrat. This was in 1968, a sad year for Dutch physics because, for the first time

in 23 years, the government did not increase the budget. The president of the Board told me that

they expected me to engage in 'science policy'; difficult choices had to be made. It was the first

time I had ever heard these words. I had to attend committee meetings in which budgets were allocated.

At first, I was highly impressed, because these committees contained virtually all the outstanding

physicists in the country (civil servants of small countries are privileged to have such experiences).

I felt rather shy about my position. What could I add to all the wisdom gathered around those tables?

But after a short while I became uneasy. There was no doubt about the personal merits of the committee

members, but as a group they did not function well. That is, they avoided disputes and avoided making

choices. Over the years, my role became clearer. With all the respect I owed them, I realized that I

had to help them to use their expertise in making choices. I shall not relate here all the attempts,

successes and failures. A number of them have been recorded and published [2,3].

Bibliometrics

Of course, we became interested in the possibility of using formal techniques such as publication counts.

In The Netherlands, disputes about the validity and usefulness of such endeavors were as heated as

elsewhere. A few years earlier, Eugene Garfield had begun publishing the Science Citation Index (SCI),

which provided a rather interesting new tool. Instead of simply estimating the relative standing of the

groups that were competing for the scarce funds available, one could now, apparently, look at the volume

of their productivity in the past and get an objective indication of the use that others were making of

their work.

In 1972, citation counting was already popular for evaluation purposes. However, we had serious doubts

about the general validity of this method. In materials science and in thermonuclear research, for

instance, the views that peers expressed to us often diverged considerably from citation counts.

While experimenting with the material, we soon found that we were dealing with a dangerous substance.

The SCI is far from faultless. Its merits and shortcomings have been highlighted by many authors, not

least by Garfield himself. Given the great interest in citations on the part of policy makers, we were

surprised to discover that virtually no attempt had been made to investigate the reliability of

citations as a means of evaluating research, research groups and institutes. We decided that such

a test was absolutely essential. The Board of FOM, which was actually very skeptical about the

potential of bibliometrics, gave us the means to carry out two extensive studies. They were designed

and directed by the late Jan Volger of Philips and myself. The real work was done by K. H. Chang et

al. [4] and by C. J. G. Bakker [5].

The first study dealt with research on magnetic resonance and relaxation during the period in which

these fields could be considered of purely scientific interest. The second study covered the R&D on

the electron microscope, that is, a subject of a technical nature.

Both studies yielded valuable lessons that we were able to apply in policy making. It was not until

many years later, when Antony van Raan invited me to contribute a chapter on these early studies to

the second Handbook of Quantitative Studies of Science and Technology [6], that I took the

opportunity to present them to a learned public of scholars of science and technology, a new branch

of social studies that emerged in the 1960s [7]. Chang and Bakker used several methods of evaluation,

among them peer review, including written reviews and cross-interviewing, and they looked at

textbooks, publications, citations to patents, patent citations and honorary awards. Finally, they

compared the results.

Since the 1960s, many people have used the technique of comparing similar groups by a variety of

methods. Possibly the best known evaluators are Martin and Irvine, who have carried out studies

in several countries. Their first findings, about radio astronomy institutes, were presented at

a conference in Yugoslavia [8]. In a by no means exhaustive literature search in 1989, we counted

about 30 papers authored by this productive duo. Among the earlier attempts at evaluation with

a multiple methodology, we should mention the work of Anderson, Narin and McAllister [9].

These techniques have now become standard practice.

Lessons from the Evaluation of Evaluation

Lesson 1. When evaluating research, one obtains the best results by applying different evaluation

techniques simultaneously. In the case of decisions concerning the allocation of funds, evaluations

comparing the achievements of groups or institutes that are similar to each other generate the least

controversy. The subjects to be compared should be, as far as possible, of the same nature.

Lesson 2. Chang's work led us to the conclusion that the bibliometric evaluation technique of

citation analysis is highly reliable as far as a purely scientific subfield of physics is concerned.

The technique was tested so thoroughly and extensively and the results were so consistent that we

feel justified in generalizing: Citation analysis is a fair evaluation tool, for those scientific

subfields where publication in the serial literature is the main vehicle of communication. The

method is cheap to administer and fast.

Nevertheless, citation counts should not be used on their own. Results should also be discussed

with peers in the field, and policy should not be decided upon before the groups involved have

had a chance to check the outcomes of the studies. There are too many pitfalls and possible sources

of error and injustice for citation counts to be relied upon completely. As the father of

quantitative studies of science and technology, Derek de Solla Price, used to say: 'Bibliometric

studies may help to keep the peers honest'. And - I would add - frank.

Lesson 3. In the case of a technological subject – the electron microscope – SCI analysis proved

to be insufficient for evaluation purposes. This conclusion was based on analysis of patents and

patent-citations as well as peer review, which gave additional valuable information. The outcomes

of citation studies were often at variance with the consensus resulting from the other techniques.

Reliance on citation studies alone would have been misleading.

We feel justified in generalizing this result also: In technology or applied research, bibliometrics

is an insufficient means of evaluation. It may help a little, but just as often it may lead to

erroneous conclusions [10].

The 'Citation Gap' in Applied Science

We were tempted to elaborate on the subject. Many fans of bibliometrics were not too pleased

with our conclusions. There is a tendency among the adherents of bibliometrics to oversell the

potential to authorities in an attempt to acquire research contracts. We had demonstrated

shortcomings. We had proved that technological work could lead to a lack of bibliometric

recognition, but we felt that we would only really contribute to the understanding of the

'citation gap' in technology if we succeeded in measuring it. This may seem an impossibility:

to measure the number of citations that were not given!

It occurred to us that the outcome of the intellectual activity that we call research ought to

be a document, a piece of work that other skilled people can decode and understand. This is a

more general notion than the conventional idea of books or scientific papers as the output of

research. Archeologists may not be surprised at the idea, but in most other fields of science

the thought is new. In particular, if the outcome of research is a new or improved scientific

instrument that can be attributed to certain identifiable 'authors', there is a 'document' that

we could try to trace. When someone uses the instrument in his research and mentions this in

his scientific papers, we are dealing with a true 'citation'. The electron microscope developed

in The Netherlands provided us with an opportunity to measure the citation gap. The work was

done by W. P. van Els and C. N. M. Jansz and published in 1989 [11].

The history of the electron microscope is as follows. In 1923, De Broglie postulated that every

particle has wave properties and that every wave can behave as a particle. At about the same time,

Gabor and Busch developed detection systems for electrons and found that a magnetic field can

influence a beam of electrons in the same way that a glass lens affects a beam of light. Although

these two ideas originated in totally different fields of science, they were eventually combined

into electron optics. It is to the credit of Ruska and Von Borries that they accomplished this feat

in the early 1930s, when they built the first electron microscope. This outstanding development was

fully recognized only in 1986, when Ruska was awarded the Nobel Prize.

Table 1. Citation counts of the main authors of EM200, EM300 and EM400.

| Author | SCI | Instr. cit. |
|---|---|---|
| W.H.J. Andersen | 9 | 2275 |
| S.L. van den Broek | 0 | 1470 |
| A.C. van Dorsten | 2 | 1470 |
| J.B. le Poole | 11 | 1470 |
| C.J. Rakels | 2 | 6335 |
| J.C. Tiemijer | 0 | 6335 |
| K.W. Witteveen | 0 | 4830 |

Citation counts for the main 'authors' of the electron microscope in The Netherlands for the period 1981-1985. The table compares 'normal citations' taken from the Science Citation Index (SCI) with textual citations of the instruments (Instr. cit.). The latter were obtained by extrapolating from the data obtained from a sample of 727 papers.

In The Netherlands, research in electron microscopy began in 1939, initiated by Le Poole

at Delft Technical University. The success of this group soon led to collaboration with the

Philips Research Laboratory and with the Organization for Applied Scientific Research, TNO.

The three then worked together to develop a practical microscope. First of all, they

produced an experimental 150 kV prototype. This was followed by a commercial instrument,

the Philips EM100. Both types incorporated a number of important innovations developed by

Le Poole. Further developments led to the EM200, which appeared on the market in 1958. This

was the first electron microscope to threaten Siemens' hegemony in the field. More than 400

of the EM200 models were built before production ceased in 1965. The successors, the EM300,

introduced in 1966, and the EM400, available since 1976, were even more successful.

For practical reasons, we restricted our study to the EM200, EM300 and EM400. Most of

these instruments are still in use today and it is known who made the principal contributions

to their development. Philips agreed to provide the names of those who had purchased the

three instruments. This unique sign of trust and confidence cannot be overestimated; without

it we could not have carried out this study. Using standard sampling techniques, we selected

a number of purchasers whom we asked for lists of their publications. This provided us with

a database of 5,073 publications, from which we selected, again by random sampling, 727

papers which our team actually read. Table 1 contains information on the citation counts

of the main authors of the electron microscopes. We have compared the normal citations

in the scientific literature, as provided by the SCI in the period 1981-85, with those

found by us as textual citations of the instruments ('Instr. cit.' in Table 1).
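The text does not describe how the counts from the 727 papers were extrapolated. As a minimal sketch of one common approach, a simple expansion estimator scales a count observed in a random sample up to the full database of 5,073 publications. The function name `extrapolate` and the estimator itself are assumptions made for illustration, not the procedure actually used in the study.

```python
# Sizes taken from the text: the publication database and the random sample
# that was actually read.
POPULATION_SIZE = 5073
SAMPLE_SIZE = 727


def extrapolate(sample_hits, sample_size=SAMPLE_SIZE, population_size=POPULATION_SIZE):
    """Scale a count observed in a simple random sample up to the population.

    Assumption: every publication had the same chance of being sampled, so
    the fraction of hits in the sample estimates the fraction in the database.
    """
    if not 0 <= sample_hits <= sample_size:
        raise ValueError("sample_hits must lie between 0 and sample_size")
    return sample_hits * population_size / sample_size


# Hypothetical input: 100 sampled papers that mention a given instrument.
estimated_total = extrapolate(100)
print(f"Estimated papers in the full database: {estimated_total:.0f}")
```

An estimate of this kind covers only the publications in the database; scaling further, for example to all users of the instruments, would need additional assumptions that the text does not give.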

Garfield [12] lists the 250 most cited primary authors

in the 1984 SCI. Their citation rates range from 448 to 11,009. The average annual

citation rates for our authors range from 294 to 1,267. Thus, with the exception of Le Poole,

Van den Broek and Van Dorsten, the 'authors' of the three microscopes fall within the 250 most

cited in the SCI of 1984. Even the score of the three others is high; one should realize that

'the publication' they authored originated in 1958 and was thus a so-called 'citation classic'

by 1984. The SCI figures that we used refer to the work of an author in its entirety, whereas

our study considered only three instruments. In the case of Van Dorsten and Le Poole in

particular, references to other work would lead to considerably higher counts. The estimates

presented are conservative, but nevertheless the size of the citation gap is striking. All

the scientists involved belong to the citation ranks in which virtually all Nobel Prize

winners are found.
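The comparison above can be retraced with a few lines of arithmetic. The sketch below divides the five-year instrument-citation totals from Table 1 by the five years 1981-85 and checks each annual rate against the lower bound of 448 quoted from Garfield's list. The function names are illustrative, and treating 448 as a simple cut-off is an assumption about how the comparison was made.

```python
# Instrument-citation totals for 1981-85, copied from Table 1.
INSTRUMENT_CITATIONS = {
    "W.H.J. Andersen": 2275,
    "S.L. van den Broek": 1470,
    "A.C. van Dorsten": 1470,
    "J.B. le Poole": 1470,
    "C.J. Rakels": 6335,
    "J.C. Tiemijer": 6335,
    "K.W. Witteveen": 4830,
}
YEARS = 5                # 1981 through 1985 inclusive
TOP_250_THRESHOLD = 448  # lowest rate among the 250 most cited authors (1984 SCI)


def annual_rates(totals, years=YEARS):
    """Average citations per year for each author."""
    return {name: total / years for name, total in totals.items()}


def above_threshold(rates, threshold=TOP_250_THRESHOLD):
    """Names whose annual rate reaches the threshold, in alphabetical order."""
    return sorted(name for name, rate in rates.items() if rate >= threshold)


rates = annual_rates(INSTRUMENT_CITATIONS)
for name, rate in rates.items():
    marker = "within top-250 range" if rate >= TOP_250_THRESHOLD else "below 448"
    print(f"{name:20s} {rate:6.0f}  {marker}")
```

The rates run from 294 to 1,267, as stated in the text, and the three authors at 294 per year are the ones the text names as exceptions.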

We have explored other fields of technology in a more or less similar way. Again, we

have chosen topics for which we have access to the necessary but otherwise inaccessible

background information. These topics include the development of the storm surge barrier

in the Eastern Scheldt, a revolutionary civil engineering innovation, and the stabilizers

developed by the Technical Physics Institute (TPD) in Delft. Some of these studies have

been published. They all confirm the general pattern: innovative technology that leads to

artifacts and has a follow-up in normal science is bibliometrically nearly invisible.

In the case of the Eastern Scheldt, we conducted a search in various literature databases.

The results are shown in Table 2. The authors with higher publication and citation counts

all work in more fundamental areas. The bibliometric invisibility of the technologists

cannot be attributed to lack of written material, but to the nature of the documents that

they produced. Numerous reports were produced in-house in many of the organizations involved

in the project. The reports are accessible, but are not considered by data services as part

of the open literature. Many reports are written in Dutch. Last but not least, many of the

written documents we traced did not even bear the names of the authors. Here, we touch again

upon important cultural differences between scientists and technologists [13]. However, several

of the non-cited authors have been awarded high scientific honors for their contributions to

the barrier.

Table 2. Citation counts: storm surge barrier.

| Name | Field | 1: SCI citations | 2: SCI publications | 3: INSPEC publications | 4: COMPENDEX publications | 5: PASCAL publications |
|---|---|---|---|---|---|---|
| Agema | pd | 2 | 0 | 0 | 1 | 0 |
| d'Angremont | co | 6 | 0 | 0 | 5 | 3 |
| Awater | sh | 0 | 1 | 0 | 0 | 3 |
| Bijker | co | 66 | 2 | 0 | 6 | 10 |
| Van Duivendijk | ci | 0 | 0 | 0 | 0 | 2 |
| Engel | pm | 0 | 0 | 0 | 0 | 0 |
| De Groot | sm | 2 | 2 | 0 | 1 | 1 |
| Heijnen | sm | 0 | 0 | 0 | 5 | 4 |
| Huis in 't Veld | co | 1 | 1 | 0 | 0 | 1 |
| Leenaarts | ee | 0 | 0 | 1 | 0 | 0 |
| Lok | me | 2 | 0 | 0 | 0 | 0 |
| Nienhuis | mb | 168 | 25 | 0 | 4 | 18 |
| Van Oorschot | co | 8 | 1 | 0 | 2 | 4 |
| Saeijs | ec | 29 | 5 | 0 | 3 | 7 |
| Spaargaren | gt | 1 | 0 | 0 | 1 | 0 |
| Stelling | he | 12 | 5 | 0 | 4 | 1 |
| Verruijt | sm | 109 | 5 | 5 | 12 | 17 |
| Vos | ci | 2 | 1 | 0 | 4 | 2 |
| Vrijling | pd | 5 | 0 | 0 | 2 | 2 |

Citation (1) and publication (2) counts taken from the Science Citation Index. Publication counts taken from the bibliographic databases INSPEC (3), COMPENDEX (4) and PASCAL (5), for some of the contributors to the Storm Surge Barrier in the Eastern Scheldt. Names of principal contributors were underlined in the original table. We distinguished the following fields: pd = probabilistic design, co = coastal engineering, sh = soil hydraulics, ci = civil engineering, pm = program management, sm = soil mechanics, ee = electrical engineering, me = mechanical engineering, mb = marine biology, ec = ecology, gt = geotextiles, he = hydraulic engineering.

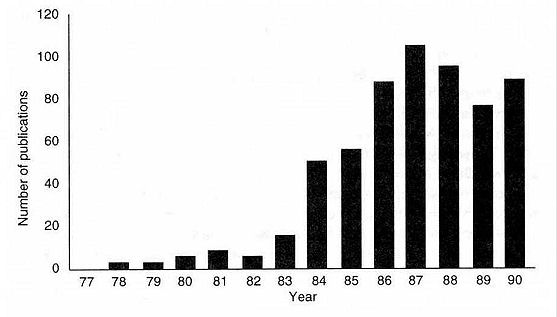

In the case of the stabilizers developed by the TPD in Delft and used in the astronomical satellite IRAS, we are dealing with a unique precision device, without which none of the IRAS measurements would have been possible. Figure 1 shows the number of publications found in the scientific databases with 'IRAS' as the search-word. None of these originates in the technical sciences. They are all astronomy papers, none of which refers to the new stabilizers. Interestingly enough, the technologists of the TPD themselves were totally unaware of their citation gap!

Figure 1.

Publications with 'IRAS' as search-word.

There are several options for further studies, among them the developments around

ARALL/GLARE, a new construction material for aircraft, and modern developments in micro-electronics.

An interesting example from the latter field is electron migration, the phenomenon in which the

momentum of electrons in current carriers causes displacement of the lattice atoms in the

carrier. This effect has been known for some time and is acquiring increasing significance.

With the shrinking of circuits, miniaturization, and the increase in current densities,

research is intensifying. However, we are seeing a marked decrease in publications and citations.

It is a matter of competition and commerce, of course. The higher the value of the research,

the less likely it is to become a citation celebrity.

The main actors in the Delta Project – the unique civil engineering project of which the

storm surge barrier was a major part – have not shown any craving for fame or acknowledgment

via publications. Many of them have simply forgotten that much of their early research was

actually written up and is still available to someone prepared to search hard for it. Anyone

contemplating a similar endeavor can examine the documentation – all twelve kilometers of it –

and talk with the engineers, who are willing to tell all they know. He should bring a cubic

meter of notebooks with him. But better still, he should hire some of the engineers as

consultants, as many governments do. Just as in other branches of science, when you want to

start a productive scientific institution you do not begin by building up a library. First

of all, you try to hire good scientists. And in this field you will not find them by counting citations.

The Utility of Formal Evaluation Methods

Despite all my reservations concerning formal evaluation techniques, I do not suggest that

they be done away with. In those branches of science where all the players agree that

publication in the serial literature is the major form of communication, citation counts

are powerful policy aids.

Again, a story from my personal experience. As an administrator, I soon discovered

that if you ask people who is an expert in such and such a field, they have a tendency to

cite persons who are their own age or older. When I did some homework first, fiddling

around with our citation data, and I mentioned some rising stars, the reply would often

be something like 'I'm surprised you know him. He is indeed an interesting up-and-coming

scientist. He wouldn't be a bad choice.' Among conservatives, I found it would be better

for me to bite my tongue off than to say I got the idea from my citation records, since

this often elicited considerable scorn on their part.

Our knowledge of publication and citation records has really improved the composition

of our committees and the nature of our advisory apparatus. Previously, we sometimes came

up with the name of someone whose only contribution to science had been the editing of

conference proceedings or a book with tables or other data sources. Sometimes the suggested

expert had died years earlier. We have also used publication and citation data to evaluate

candidates for promotion or senior appointments. But in all these cases, we have avoided

relying on citations and publications alone and have used them simply to help us formulate

questions for peer groups. We have never allowed formal evaluation to be the decisive criterion.

The group led by A. F. J. van Raan and H. F. Moed at Leiden University has made extensive

use of bibliometric material in the evaluation of research groups. They also have taken care

to avoid the many mistakes that rough citation counts may cause. They have never used their

results in policy recommendations to the board of the university or to faculties without

first acquainting the groups with their preliminary findings. By doing so, they have acquired

a good reputation in the faculties, and their studies have been instrumental in bringing about

budget shifts and the reallocation of personnel. Their performance recently won them recognition

from the Central Research Council in The Netherlands, NWO, which has given them a contract to extend

their studies to other universities.

I have always opposed or discouraged the use of bibliometrics for the evaluation of work

in technical universities. The technological branch of the Netherlands Research Council is

the only branch whose annual report does not list the publications that stem from the projects

which it supports. The board is favorable to publications but only if they do not impede the

major task – that is, the transfer of knowledge to quarters that can make use of it in a practical way.

The board maintains an active patent policy. Patents are, of course, publications in their

own right. But we all know that such publications do not increase a person's scientific visibility.

Patent citations are rare, and it is certainly more difficult to get reliable statistics. F. Narin

and his colleagues at his small company, Computer Horizons, are doing interesting work on patent

statistics and patent citations. Such work provides some insight into the strengths and weaknesses

of technology at the national level. It may also help companies with substantial patent portfolios

to evaluate their accomplishments and those of their competitors. But this work is of little use in

the systematic evaluation of research groups in academia.

Technology is not confined to technical universities. Many groups in regular universities and

in public research institutions, that have been set up for basic science, do interesting things of

an applicable nature. Whether they perform well as technologists depends just as much on the

quality of their work as on the effectiveness with which they succeed in transferring their

results in a practical way to their 'users'. When evaluating their output, one should bear

in mind that knowledge transfer other than via scientific papers requires considerable effort.

This effort takes time, is labor-intensive, and cannot be traced bibliometrically. It could be

very damaging to such groups or institutes if policy towards them were determined on the basis

of their publications and citations alone.

Proposal Evaluation by STW

Grant requests to STW, the technology branch of the Netherlands Research Council, can be submitted

all year round. Applicants must have a tenured position in a Dutch university. Grants may cover all

direct costs for research such as salaries for additional personnel, materials, instruments and travel.

Proposals differ from similar proposals to other research councils in that they have to contain a

paragraph in which applicants explain how they intend to promote the outcome of the research for

practical use outside the particular field of science in which they work.

Program officers of STW send each proposal to five or more referees active in public and private

research related to the proposal, with the request that they explain why they think the proposal is

good or bad and whether they think the proposed utilization is wise and, if so, why. Suggestions for

improvements are encouraged. The anonymous comments received by STW are forwarded to applicants, who

may comment and answer questions.

When 20 proposals from different fields of technology have been reviewed in this way, STW appoints

an ad hoc lay jury consisting of 11 people from universities, other government institutions, and private

companies. They receive the 20 proposals with the referees' comments and those of the defendants, and

they are asked to assign each proposal two scores – one for scientific merit and one for the likelihood

of useful utilization. In the final rating, the two marks are given equal weight and combined. (In

reality, the jury consultation is a two-round DELPHI procedure in which there is opportunity for

question and answer. However, juries do not meet, and only valid arguments are distributed among

the members.) STW usually funds the top eight of the 20 proposals.
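The final rating step can be illustrated with a short sketch. It assumes that "combined" means the arithmetic mean of the two marks (a sum would give the same ranking) and that a lower mark is better for both scores; the text states this explicitly only for utilization. The class `Proposal`, the function `select_for_funding` and all marks below are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Proposal:
    name: str
    scientific_merit: float  # jury mark; assumed lower is better
    utilization: float       # jury mark; lower is better

    @property
    def combined(self) -> float:
        # Equal weight for both marks, as described in the text.
        return (self.scientific_merit + self.utilization) / 2


def select_for_funding(proposals, n_funded=8):
    """Rank proposals by combined mark (best first) and keep the top n_funded."""
    ranked = sorted(proposals, key=lambda p: p.combined)
    return ranked[:n_funded]


# Hypothetical batch of 20 proposals with made-up marks.
batch = [
    Proposal(
        name=f"P{i:02d}",
        scientific_merit=2.0 + ((7 * i) % 20) * 0.15,
        utilization=2.0 + ((3 * i) % 20) * 0.15,
    )
    for i in range(20)
]

funded = select_for_funding(batch)
for p in funded:
    print(f"{p.name}: merit {p.scientific_merit:.2f}, "
          f"utilization {p.utilization:.2f}, combined {p.combined:.2f}")
```

The sketch breaks ties by input order; the text does not say how the juries or STW resolve equal scores.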

STW has great confidence in this system, which has been in operation since 1980. Elsewhere, we

have reported on the internal consensus and the consistency of the juries' judgments [14]. Recently,

we have obtained reliable statistics about the predictive value of the juries' judgments concerning

the likely applicability of research results and the ultimate real outcome of projects [15]. They show

that juries do have great predictive talent.

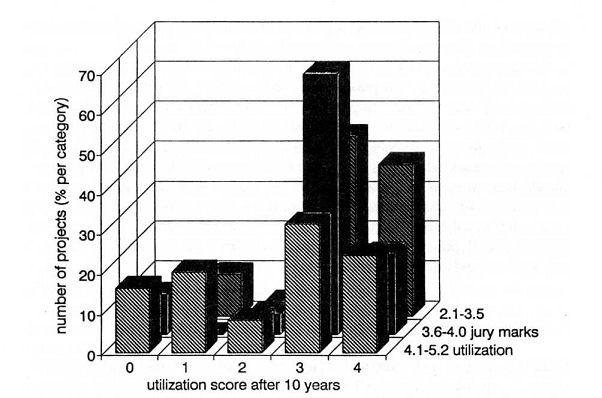

Figure 2.

Relation between juries' judgements regarding utilization of proposals and

the ultimate outcome of research projects after 10 years.

Figure 2 shows the relation between the juries' judgments concerning utilization of the

research proposals and the outcome of the projects that were funded. The projects are divided

into three groups of about equal size: those with jury scores of 2.1-3.5, 3.6-4.0 and 4.1-5.2.

The lower the figure, the higher the utilization merit attributed to the proposals. Proposals

that scored worse than 5.2 are not included, because they were not funded. In each of these

three categories, the figure shows the percentage of projects which, after a period of 10 years,

ended in a certain utilization category. (The sum per jury group = 100 per cent.) We waited 10

years after the start of the projects before carrying out this evaluation, since this seemed to

be the time needed to draw valid conclusions concerning the ultimate use of the type of research

that STW funds. The utilization categories to which projects were assigned at the end of the

ten year period were as follows:

4 A private company is commercializing the results of the project. STW and the company have

signed a contract, involving royalty revenues for STW.

3 A company is using the research results in products or processes; there is no contract,

though there may be occasional revenues.

2 A private company is using the results, for example, in further R&D, or in the form of

information used in investment decisions: the outcomes of the work showed that a certain

line should not be pursued. (Also a valuable time- and money-saving result of a research

project.) During a certain stage of the research, a private company has substantially

contributed to the STW project. (If STW was wrong in its judgement, so were the potential users.)

1 As in category 2, but without user contribution.

0 No private firm uses the results.

It should be noted that this last category also includes projects that did find a user

in the non-profit sector, such as public health or state civil engineering works.
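The tabulation behind a figure of this kind can be sketched as follows: each funded project is placed in one of the three jury-score groups, and within each group the share of projects ending in each utilization category (0 to 4) is expressed as a percentage, so that every group sums to 100 per cent. The project data below are invented purely for illustration and the function names are assumptions; only the score ranges and the category codes come from the text.

```python
from collections import Counter

# Jury-score groups from the text; a lower score means higher utilization merit.
JURY_BANDS = [(2.1, 3.5), (3.6, 4.0), (4.1, 5.2)]
CATEGORIES = range(5)  # utilization categories 0..4


def band_of(score):
    """Return the jury-score group for a score rounded to one decimal, or None."""
    for low, high in JURY_BANDS:
        if low <= score <= high:
            return (low, high)
    return None  # e.g. scores worse than 5.2, which were not funded


def outcome_percentages(projects):
    """projects: iterable of (jury_score, utilization_category) pairs."""
    counts = {band: Counter() for band in JURY_BANDS}
    for score, category in projects:
        band = band_of(score)
        if band is not None:
            counts[band][category] += 1
    table = {}
    for band, counter in counts.items():
        total = sum(counter.values())
        table[band] = {
            cat: (100.0 * counter[cat] / total if total else 0.0)
            for cat in CATEGORIES
        }
    return table


# Hypothetical projects: (jury score, category reached after 10 years).
projects = [
    (2.4, 4), (3.0, 3), (3.5, 0),
    (3.6, 2), (3.9, 0), (4.0, 1),
    (4.1, 0), (4.8, 0), (5.2, 2),
    (5.6, 0),  # worse than 5.2: excluded, as in the figure
]

for band, shares in outcome_percentages(projects).items():
    row = ", ".join(f"cat {cat}: {pct:.0f}%" for cat, pct in shares.items())
    print(f"jury score {band[0]}-{band[1]}: {row}")
```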

It is clear from this figure that the grant selection procedure correlates strongly with

the final outcomes of projects. Currently, we are trying to investigate the validity of

jury judgements concerning the scientific quality of research projects, using comparisons

with bibliometric evaluation techniques.

While copying this article from the original document

to include it in this website, some minor corrections

have been made, Nieuwegein, 2008 08 27.

The author retired from STW.

Notes and references

M.S. Frankel & J. Cave: Evaluating Science and Scientists, Centr. Eur. Univ. Press Budapest 1997.